Learning to Anonymize Faces for Privacy Preserving Action Detection


European Conference on Computer Vision (ECCV), 2018.

Abstract

There is increasing concern about computer vision devices invading the privacy of their users. We want camera systems and robots to recognize important events and assist daily human life by understanding their videos, but we also want to ensure that they do not intrude on people's privacy. In this paper, we propose a new principled approach for learning a video anonymizer. We use an adversarial training setting in which two competing systems fight: (1) a video anonymizer that modifies the original video to remove privacy-sensitive information (i.e., human faces) while still trying to maximize spatial action detection performance, and (2) a discriminator that tries to extract privacy-sensitive information from such anonymized videos. The end goal is for the video anonymizer to perform a pixel-level modification of video frames to anonymize each person's face while minimizing the effect on action detection performance. We experimentally confirm the benefit of our approach, particularly compared to conventional hand-crafted video/face anonymization methods including masking, blurring, and noise adding.
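The two competing objectives described in the abstract can be illustrated with a minimal sketch. This is not the paper's formulation: it assumes, purely for illustration, that the discriminator outputs a probability that an anonymized face matches a reference identity, and that the anonymizer adds a weighted "fooling" term to its action detection loss. The function names and the `adv_weight` parameter are hypothetical.

```python
import math


def bce(p, label):
    """Binary cross-entropy for one predicted probability p and a 0/1 label."""
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def discriminator_loss(p_same_identity, is_same_identity):
    """Discriminator side (assumed): predict the true identity match label
    from an anonymized face, i.e. recover privacy-sensitive information."""
    return bce(p_same_identity, is_same_identity)


def anonymizer_loss(action_loss, p_same_identity, is_same_identity, adv_weight=1.0):
    """Anonymizer side (assumed): keep the action detection loss low while
    pushing the discriminator toward the wrong identity decision."""
    fooling_loss = bce(p_same_identity, 1 - is_same_identity)
    return action_loss + adv_weight * fooling_loss


# Hypothetical values: the discriminator is 90% sure the anonymized face
# still matches the original identity, which is bad for the anonymizer.
print(discriminator_loss(0.9, 1))
print(anonymizer_loss(action_loss=0.3, p_same_identity=0.9, is_same_identity=1))
```

In this sketch the two losses pull in opposite directions on the same discriminator output, which is the sense in which the two systems "fight"; the action detection term keeps the anonymizer from simply destroying the frame.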


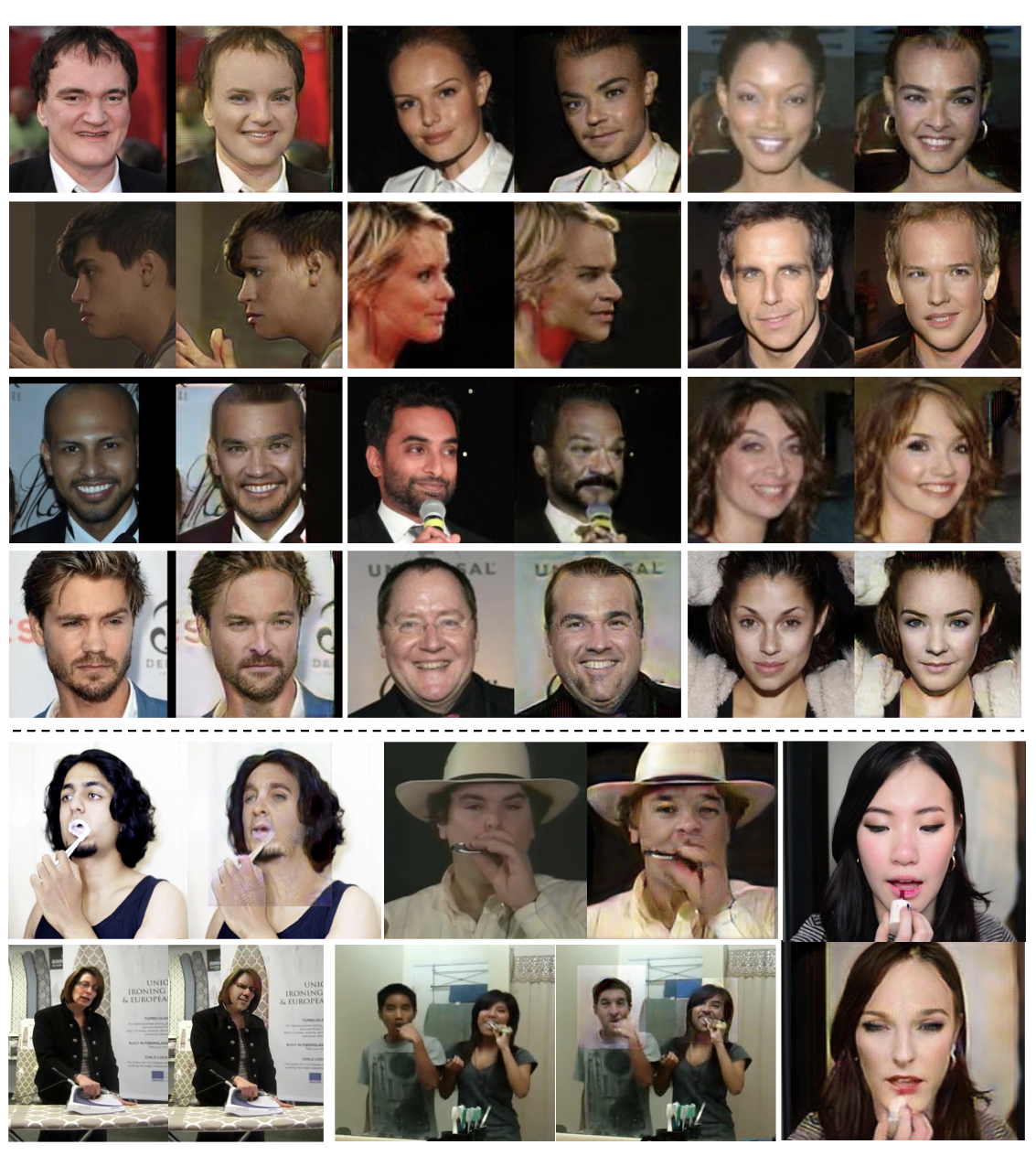

The picture on the left of each pair is the original image, and the one on the right is the modified image. The first four rows are from the face dataset (CASIA); the bottom two are from the video dataset (DALY).

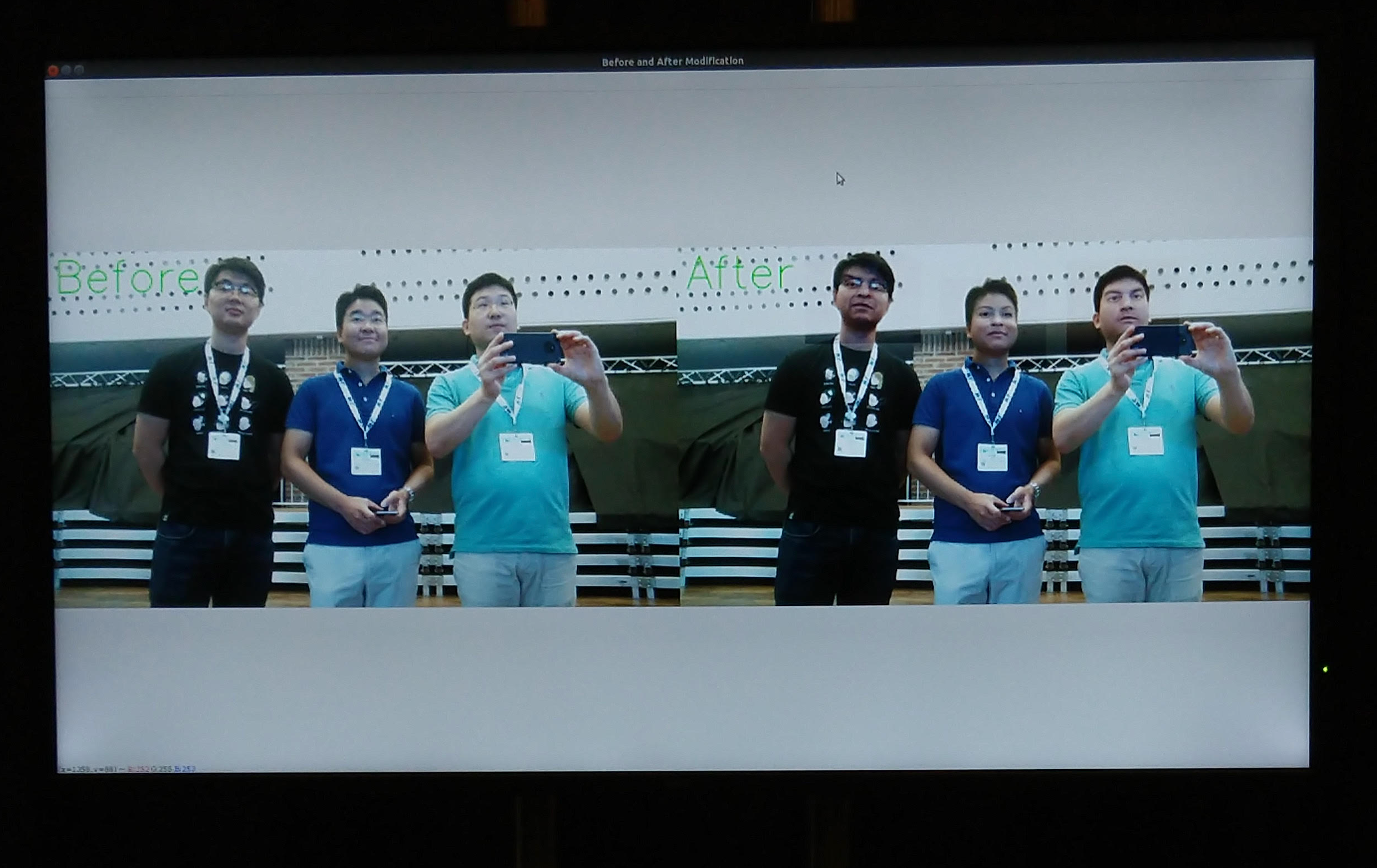

ECCV DEMO

Activity-Preserving Face Anonymization for Privacy Protection.

Zhongzheng Ren, Yong Jae Lee, Hyun Jong Yang, Michael S. Ryoo.

ECCV Demo Session, Munich, Germany, 2018

Materials

Talk

Code

Related Work

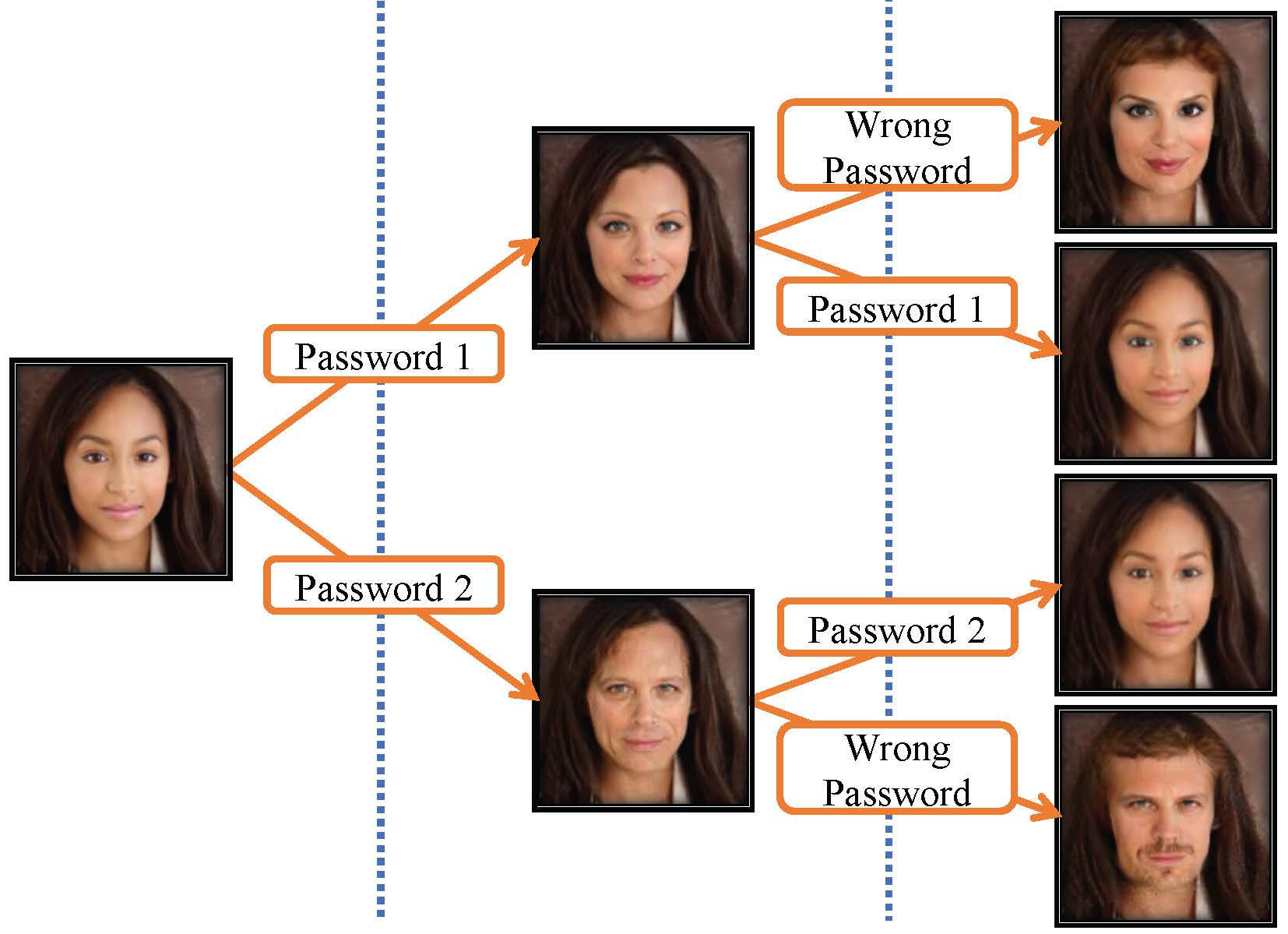

Password-conditioned Anonymization and Deanonymization with Face Identity Transformers.

Xiuye Gu, Weixin Luo, Michael S. Ryoo, Yong Jae Lee. arXiv:1911.11759

Acknowledgments

This research was conducted as a part of EgoVid Inc.'s research activity on privacy-preserving computer vision. This work was supported by the Technology development Program (S2557960) funded by the Ministry of SMEs and Startups (MSS, Korea). We thank all the subjects who participated in our user study. We also thank Chongruo Wu, Fanyi Xiao, Krishna Kumar Singh, and Maheen Rashid for their valuable discussions on this work.

Comments, questions to Jason Ren